publications

publications in reverse chronological order.

2023


Converting Depth Images and Point Clouds for Feature-Based Pose Estimation. Robert Lösch, Mark Sastuba, Jonas Toth, and Bernhard Jung. In 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2023.

In recent years, depth sensors have become more and more affordable and have found their way into a growing number of robotic systems. However, mono- or multi-modal sensor registration, often a necessary step for further processing, faces many challenges on raw depth images or point clouds. This paper presents a method of converting depth data into images capable of visualizing spatial details that are largely hidden in traditional depth images. After noise removal, each neighborhood of points yields two normal vectors whose difference is encoded in the new conversion. Compared to Bearing Angle images, our method yields brighter, higher-contrast images with more visible contours and more details. We tested feature-based pose estimation on both conversions in a visual odometry task and in RGB-D SLAM. For all tested features (AKAZE, ORB, SIFT, and SURF), our new Flexion images yield better results than Bearing Angle images and show great potential to bridge the gap between depth data and classical computer vision. Source code is available at https://rlsch.github.io/depth-flexion-conversion.

@inproceedings{Loesch2023, title = {Converting Depth Images and Point Clouds for Feature-Based Pose Estimation}, author = {L{\"o}sch, Robert and Sastuba, Mark and Toth, Jonas and Jung, Bernhard}, booktitle = {2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, year = {2023}, pages = {3422-3428}, doi = {10.1109/IROS55552.2023.10341758}, issn = {2153-0866}, month = oct, url = {https://rlsch.github.io/depth-flexion-conversion/}, }
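For context on the Bearing Angle baseline that the abstract compares against, here is a minimal NumPy sketch of a Bearing Angle encoding for one row of range readings. It assumes the commonly used formulation in which each pixel stores the angle, at a point, between the beam to that point and the segment joining it to the previous point in the row. It is not the paper's Flexion conversion (the linked source code is the reference for that); the helper names `bearing_angle_row` and `to_gray`, the row-wise traversal, and setting the first pixel to 0 are illustrative assumptions.

```python
import numpy as np


def bearing_angle_row(ranges, dphi):
    """Bearing Angle (radians) for one row of range readings.

    Hypothetical helper for illustration. `ranges` holds the ranges rho_i
    of consecutive beams in one row (distances, not z-depths), and `dphi`
    is the angular step between neighboring beams in radians.
    The first pixel has no predecessor and is set to 0.
    """
    rho = np.asarray(ranges, dtype=float)
    prev, cur = rho[:-1], rho[1:]
    cos_d = np.cos(dphi)
    # Length of the segment between consecutive points (law of cosines).
    seg = np.sqrt(cur**2 + prev**2 - 2.0 * cur * prev * cos_d)
    # Cosine of the angle at point i between its beam and the segment to point i-1.
    cos_ba = (cur - prev * cos_d) / np.maximum(seg, 1e-12)
    ba = np.arccos(np.clip(cos_ba, -1.0, 1.0))
    return np.concatenate(([0.0], ba))


def to_gray(ba):
    """Scale angles in [0, pi] to 8-bit gray values for display."""
    return np.round(np.asarray(ba) / np.pi * 255.0).astype(np.uint8)
```

As a sanity check, `bearing_angle_row([2.0, 2.0, 2.0], 0.1)` returns 0 followed by π/2 − 0.05 for the other two pixels: equal ranges form an isosceles triangle with the sensor, whose base angles are (π − Δφ)/2. Applying the function to every row of an organized point cloud and passing the result to `to_gray` gives a grayscale image on which feature detectors can run.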

2018


Design of an Autonomous Robot for Mapping, Navigation, and Manipulation in Underground Mines. Robert Lösch, Steve Grehl, Marc Donner, Claudia Buhl, and Bernhard Jung. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2018.

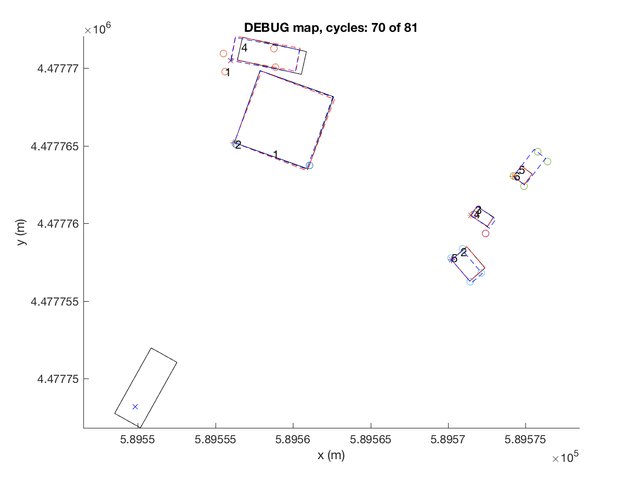

Underground mines are a dangerous working environment; therefore, robots could help put fewer humans at risk. Traditional robots, sensors, and software often do not work reliably underground due to the harsh environment. This paper analyzes requirements and presents a robot design capable of navigating autonomously underground and manipulating objects with a robotic arm. The robot's base is a robust four-wheeled platform that is powered by electric motors and able to withstand the harsh environment. It is equipped with color and depth cameras, lighting, laser scanners, an inertial measurement unit, and a robotic arm. We conducted two experiments testing mapping and autonomous navigation. Mapping a 75-meter-long route including a loop closure results in a map that qualitatively matches the original map well. Testing autonomous driving on a previously created map of a second, straight, 150-meter-long route was also successful. However, without loop closure, rotation errors cause visible deviations in the created map. These first experiments demonstrated that the robot can operate underground.

@inproceedings{Loesch2018, title = {Design of an Autonomous Robot for Mapping, Navigation, and Manipulation in Underground Mines}, author = {L{\"o}sch, Robert and Grehl, Steve and Donner, Marc and Buhl, Claudia and Jung, Bernhard}, booktitle = {2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, year = {2018}, pages = {1407-1412}, doi = {10.1109/IROS.2018.8594190}, issn = {2153-0866}, month = oct, }

2017


Multitarget multisensor motion tracking of vehicles with vehicle based multilayer 2D laser range finders. Robert Lösch. Master's thesis, TU Munich, Jan 2017.

For tracking, autonomous vehicles often use 3D laser range finders (LRFs), which are expensive. To make autonomous cars affordable as a mass product, we use multiple, more affordable 2D LRFs for tracking and implement an algorithm with the goal of achieving similar performance. Our tracking algorithm comprises the following steps: data preprocessing, segmentation, classification, feature extraction, data association, and track management. To compensate for the reduced information, we reuse track information and focus on reducing over-segmentation and the effects of the shape change problem. We test the algorithm on our own labeled data and on training data sets of "The KITTI Vision Benchmark Suite" using the CLEAR MOT and MT/PT/ML metrics. In addition, we test specific properties in dedicated scenarios. Our algorithm obtains a MOT accuracy (MOTA), which reflects how many objects are correctly detected and tracked, of 0.79 on our own data and negative values on the KITTI data sets. The obtained MOT precision (MOTP), an averaged detection precision, is around 60 percent on all data sets. Of all tracks, 47.83 percent are mostly tracked on our own data and zero percent on the KITTI data sets. Our algorithm for tracking multiple objects with multiple moving 2D LRFs does not reach the performance of 3D LRFs. Improving feature extraction and especially the classification that distinguishes vehicles from non-vehicle objects would boost its performance.

@mastersthesis{Loesch2017, title = {Multitarget multisensor motion tracking of vehicles with vehicle based multilayer 2D laser range finders}, author = {L{\"o}sch, Robert}, year = {2017}, month = jan, school = {TU Munich}, address = {Munich, Germany}, pages = {89}, language = {en}, keywords = {multitarget multisensor tracking LRF LIDAR DATMO MOT}, type = {Master's thesis}, }
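The evaluation above relies on the CLEAR MOT and MT/PT/ML metrics; the following Python sketch shows how they are typically computed and why MOTA can become negative. It assumes per-frame error counts are already available from a matching step (not shown), and it uses the commonly cited thresholds of at least 80 percent coverage for "mostly tracked" and at most 20 percent for "mostly lost". The names `FrameCounts`, `mota`, `motp`, and `mt_pt_ml` are hypothetical and not taken from the thesis.

```python
from dataclasses import dataclass


@dataclass
class FrameCounts:
    """Per-frame error counts after matching hypotheses to ground truth."""
    gt: int          # number of ground-truth objects in the frame
    misses: int      # ground-truth objects with no matched hypothesis
    false_pos: int   # hypotheses with no matched ground-truth object
    mismatches: int  # identity switches relative to earlier frames


def mota(frames):
    """CLEAR MOT accuracy: 1 - (misses + false positives + mismatches) / ground truth."""
    total_gt = sum(f.gt for f in frames)
    errors = sum(f.misses + f.false_pos + f.mismatches for f in frames)
    return 1.0 - errors / total_gt


def motp(match_scores):
    """CLEAR MOT precision: mean score (e.g., overlap) over all matched pairs."""
    return sum(match_scores) / len(match_scores)


def mt_pt_ml(coverage, mt_thresh=0.8, ml_thresh=0.2):
    """Count mostly tracked, partially tracked, and mostly lost trajectories.

    `coverage` holds, per ground-truth trajectory, the fraction of its
    lifespan during which it was matched to a hypothesis.
    """
    mt = sum(1 for c in coverage if c >= mt_thresh)
    ml = sum(1 for c in coverage if c <= ml_thresh)
    return mt, len(coverage) - mt - ml, ml
```

Because the error terms are not bounded by the number of ground-truth objects, a tracker that produces many false positives drives MOTA below zero: `mota([FrameCounts(4, 2, 6, 0)])` evaluates to 1 − 8/4 = −1.0.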

2016


Can automated road vehicles harmonize with traffic flow while guaranteeing a safe distance? Matthias Althoff and Robert Lösch. In 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), Nov 2016.

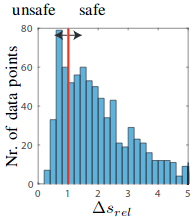

A frequently raised argument against safely driving automated vehicles is that they would not harmonize well with traffic flow: unrealistically large headways would invite other traffic participants to cut in and thus put passengers of following automated vehicles at risk. To test this hypothesis, we use real data of thousands of vehicles recorded in the United States as part of the Next Generation Simulation (NGSIM) program and pretend that each human-driven vehicle is automated. These automated vehicles drive exactly like the recorded human drivers, but they have a much smaller reaction time and can thus still drive safely in situations that are unsafe for human drivers. The main result is that an automated vehicle would not drive safely in only very few cases, although more than half of the human drivers do not keep a safe distance according to their capabilities. Thus, there is no increased risk of vehicles cutting in, and if they do, automated vehicles are at risk in only around 10% of all cases, compared to around 60% for human drivers.

@inproceedings{Althoff2016, title = {Can automated road vehicles harmonize with traffic flow while guaranteeing a safe distance?}, author = {Althoff, Matthias and L{\"o}sch, Robert}, booktitle = {2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC)}, year = {2016}, pages = {485-491}, doi = {10.1109/ITSC.2016.7795599}, issn = {2153-0017}, month = nov, }
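The argument above hinges on how a shorter reaction time shrinks the distance a vehicle needs to stay safe. The following Python sketch illustrates this with a simplified stopping-distance formula; it is an assumption for illustration, not the safe-distance formulation used in the paper, and `safe_distance` as well as all numbers below are hypothetical.

```python
def safe_distance(v_f, v_l, reaction, a_f, a_l):
    """Simplified safe following distance in meters (stopping-distance form).

    Hypothetical helper, not the formulation from the paper. The follower
    (speed v_f) keeps its speed for `reaction` seconds and then brakes with
    deceleration a_f; the leader (speed v_l) brakes immediately with a_l.
    Speeds in m/s, decelerations as positive values in m/s^2. Comparing only
    the two stopping points is exact when the follower cannot brake harder
    than the leader; otherwise the gap can close before both have stopped.
    """
    # Distance the follower covers until standstill: reaction phase plus braking.
    follower_stop = v_f * reaction + v_f**2 / (2.0 * a_f)
    # Distance the leader covers until standstill.
    leader_stop = v_l**2 / (2.0 * a_l)
    return max(0.0, follower_stop - leader_stop)
```

With both vehicles at 30 m/s and both able to decelerate at 8 m/s², a reaction time of 1.5 s requires a 45 m gap, whereas 0.5 s requires only 15 m. A recorded human headway that is unsafe for the human driver can therefore still be safe for an automated vehicle that drives identically but reacts faster.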